Google BigQuery

Google BigQuery is a fully managed, serverless data warehouse with built-in machine learning capabilities.

What can you do with the Google BigQuery integration?

As with all of Deepnote's SQL-related integrations, the Google BigQuery integration allows you to query databases and explore the structure of your warehouse. Think "SQL editor" but with all the modern productivity boosters that come with Deepnote's notebook. For example,

- Write native SQL and Python in the same notebook

- Search your entire warehouse instantly via the integrated schema explorer

- Get intelligent autocomplete for columns, tables, and databases

- Interactively explore data without writing any additional code

- Run queries powered by BigQuery DataFrames

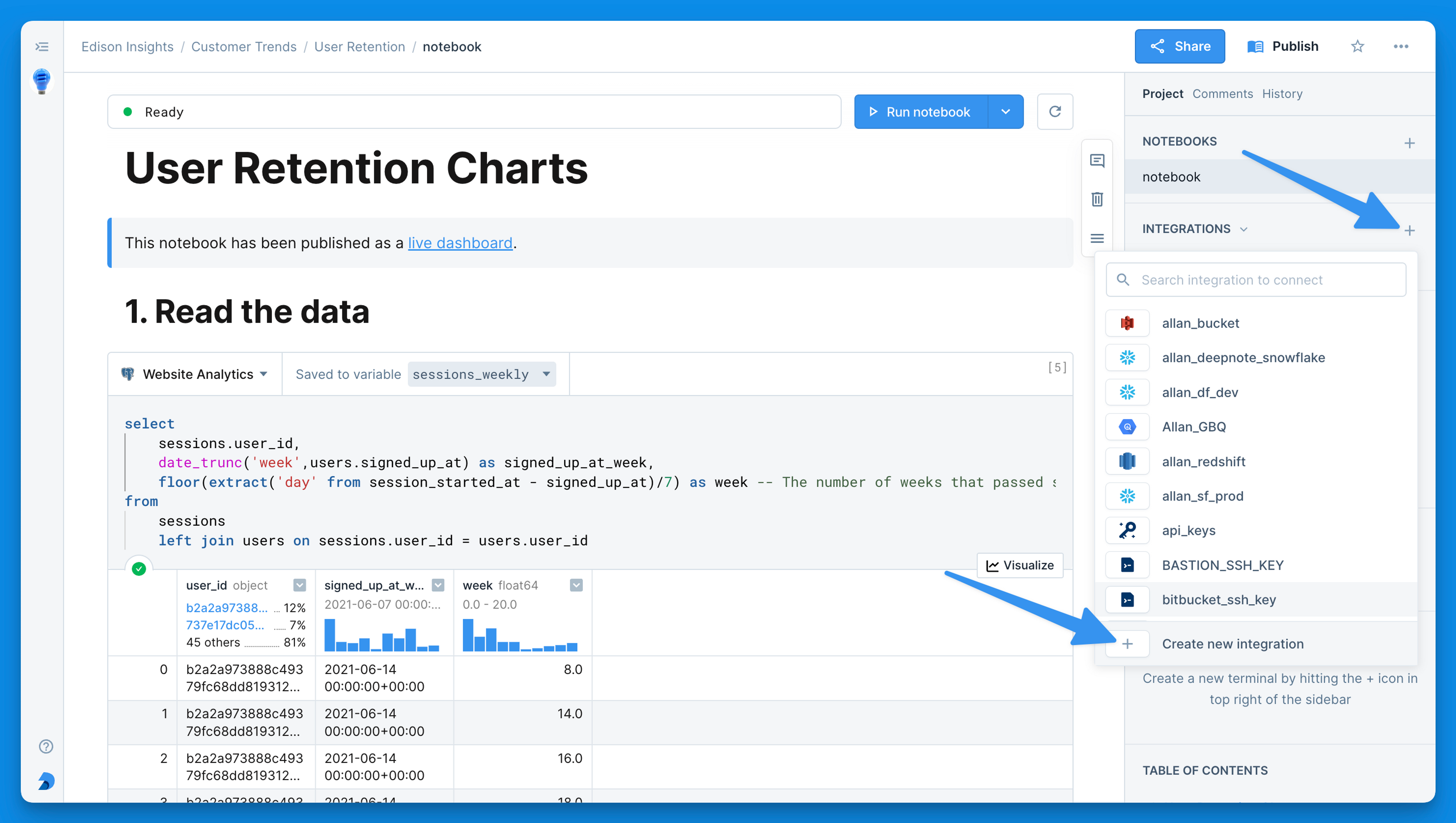

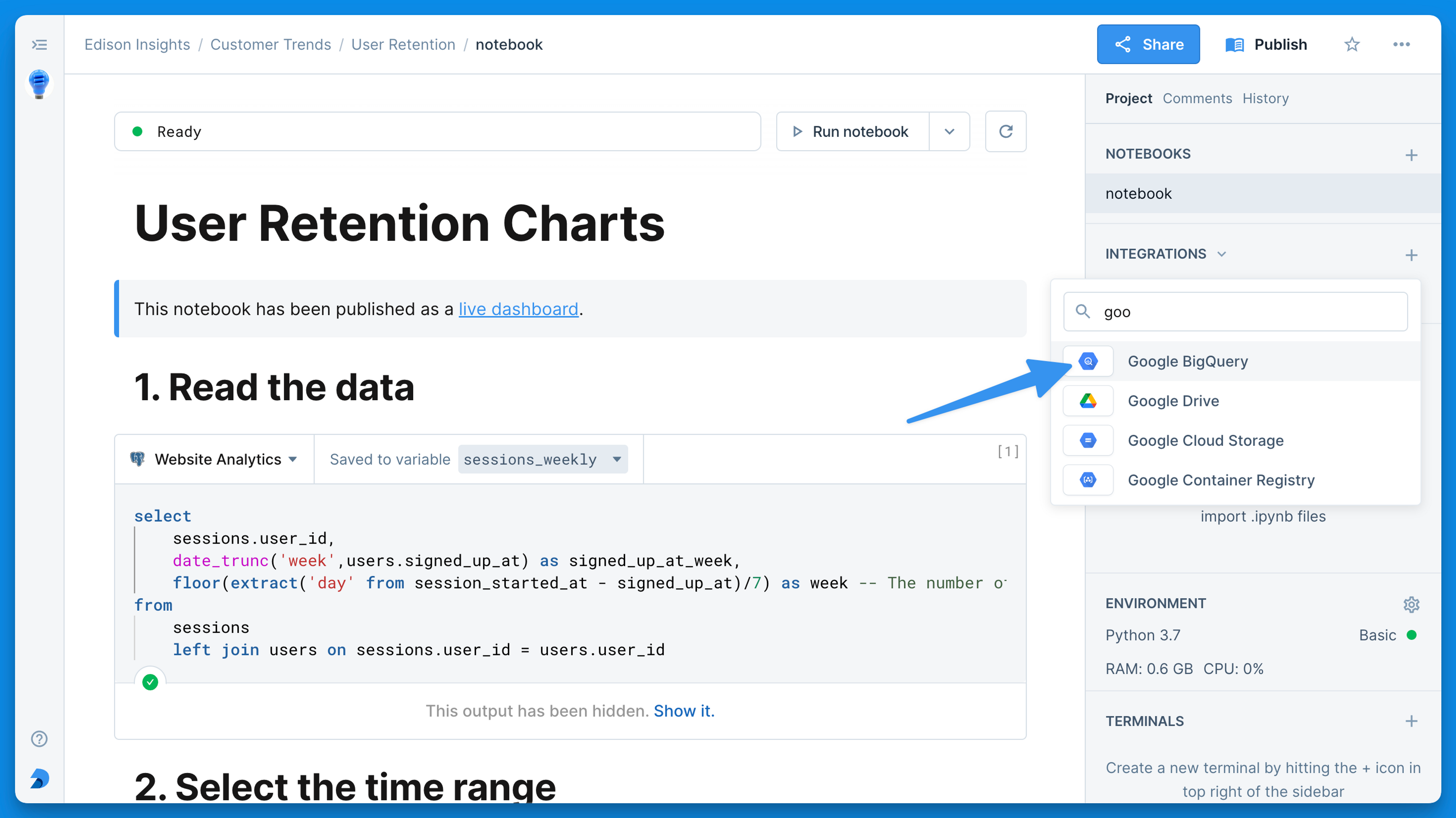

How to connect to Google BigQuery

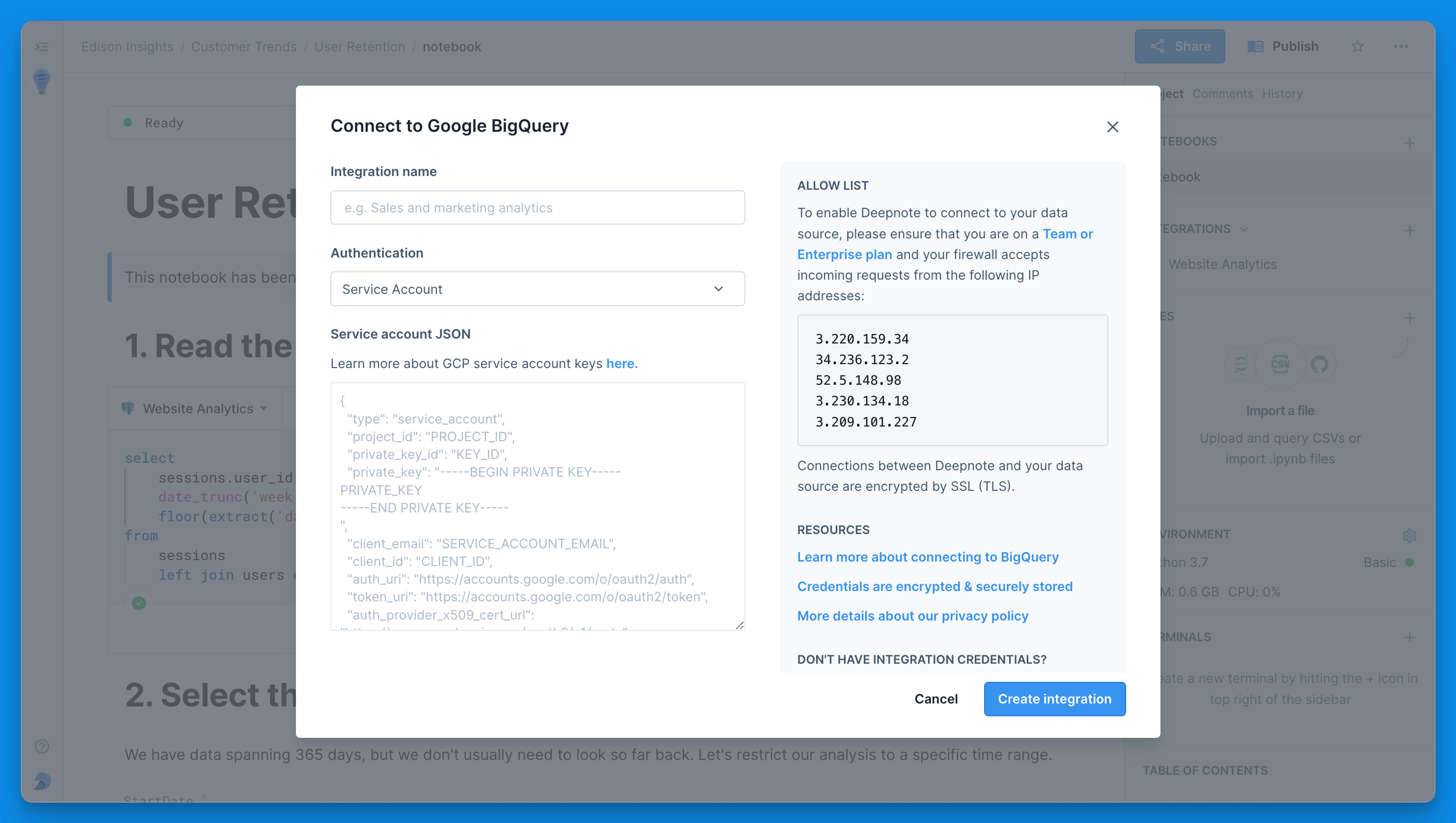

Authenticating with a service account

A service account will provide a shared connection to Google BigQuery. That is, all collaborators with least Editor privileges will be able run queries against databases provisioned in the service account.

To use the service account authentication, you will need to supply a JSON service account key. Click here for a guide on creating a JSON service account key. Your service account key will be encrypted and stored in Deepnote's database.

Authenticating to BigQuery with Google OAuth

With BigQuery's Google OAuth authentication you can give every member of your Deepnote workspace their own set of credentials. This ensures greater security by using short-lived tokens and enabling the use of multi-factor authentication. Follow the principle of least privilege and use granular access control for various BigQuery resources to ensure users can only access the data they need. Click here to learn how to set up BigQuery's Google OAuth authentication in Deepnote.

Working with data from Google BigQuery

Now that you are connected to your Google BigQuery can do the following actions in Deepnote:

-

Click the newly created integration in the Integrations section to open the schema browser. Click here to learn more about the schema browser.

-

Create an SQL block that points to your warehouse and begin querying your data. Autocomplete for columns, tables, and databases will be at your fingertips as you type. Click here to learn more about SQL blocks.

-

Explore column distributions as well as the sorting and filtering capabilities on the results set. All results are displayed as an interactive Pandas DataFrame. Click here to learn about interactive DataFrame output.

-

Pipe the results of your query into a chart block for rapid interactive data visualization. Click here to learn more about chart blocks.

Using pure Python to connect to BigQuery

To go beyond querying (like listing tables, creating datasets, etc.), you may need to use the official Python client library (docs).

Use this code snippet to authenticate the python client using the integration's service account:

import json

import os

from google.oauth2 import service_account

from google.cloud import bigquery

bq_credentials = service_account.Credentials.from_service_account_info(

json.loads(os.environ['INTEGRATION_NAME_SERVICE_ACCOUNT']))

client = bigquery.Client(credentials=bq_credentials,

project=bq_credentials.project_id)

Just replace the INTEGRATION_NAME with an uppercased, underscore-connected name of your integration. If you have trouble finding it, run this one-liner to list environment variables that contain service accounts:

[var for var in os.environ if '_SERVICE_ACCOUNT' in var]

Once the BigQuery client is initialized, you can use it to run queries and materialize the results as dataframes like this:

sql = """

SELECT *

FROM `bigquery-public-data.usa_names.usa_1910_current`

LIMIT 1000

"""

df = client.query(sql).to_dataframe()

``